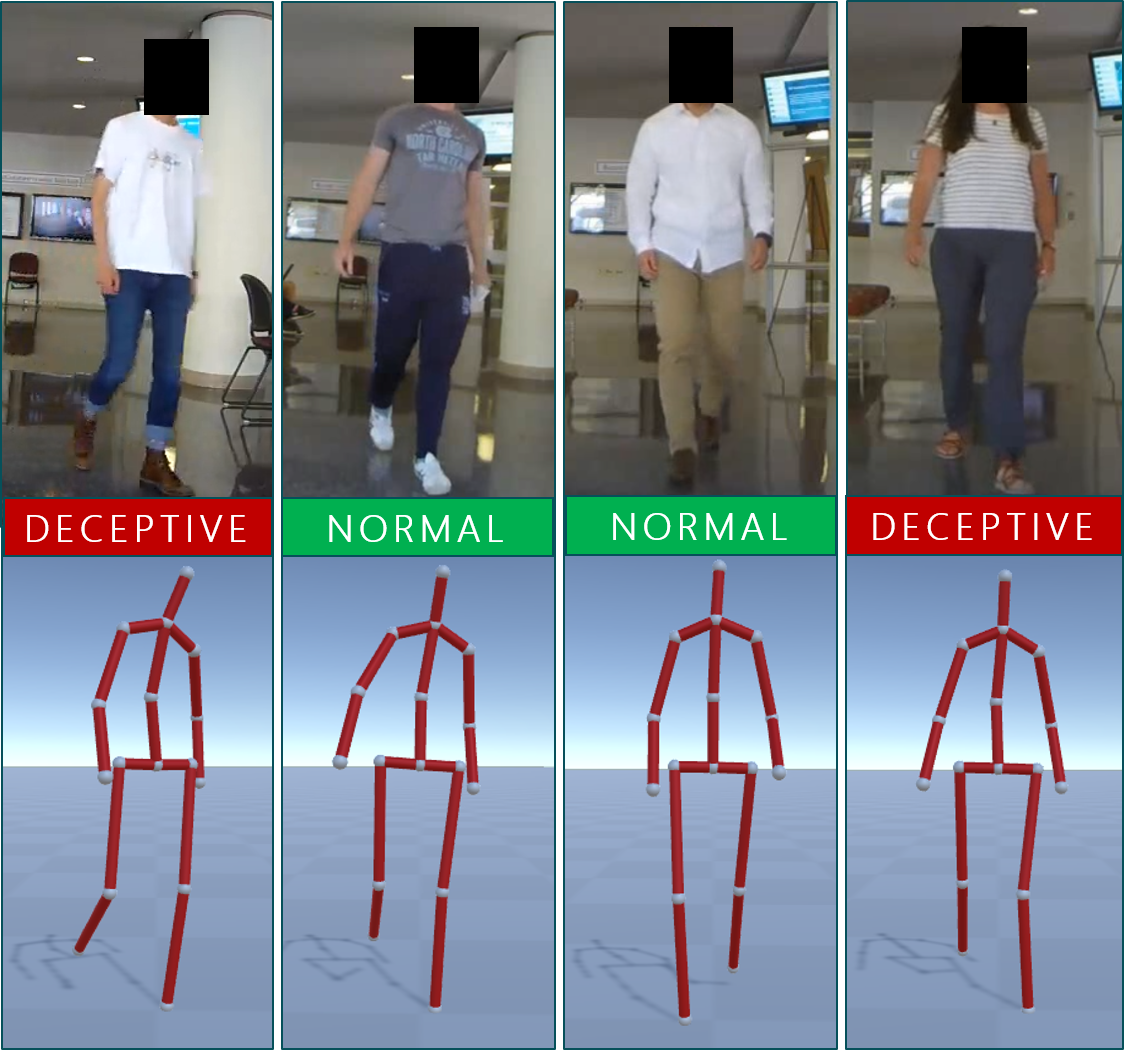

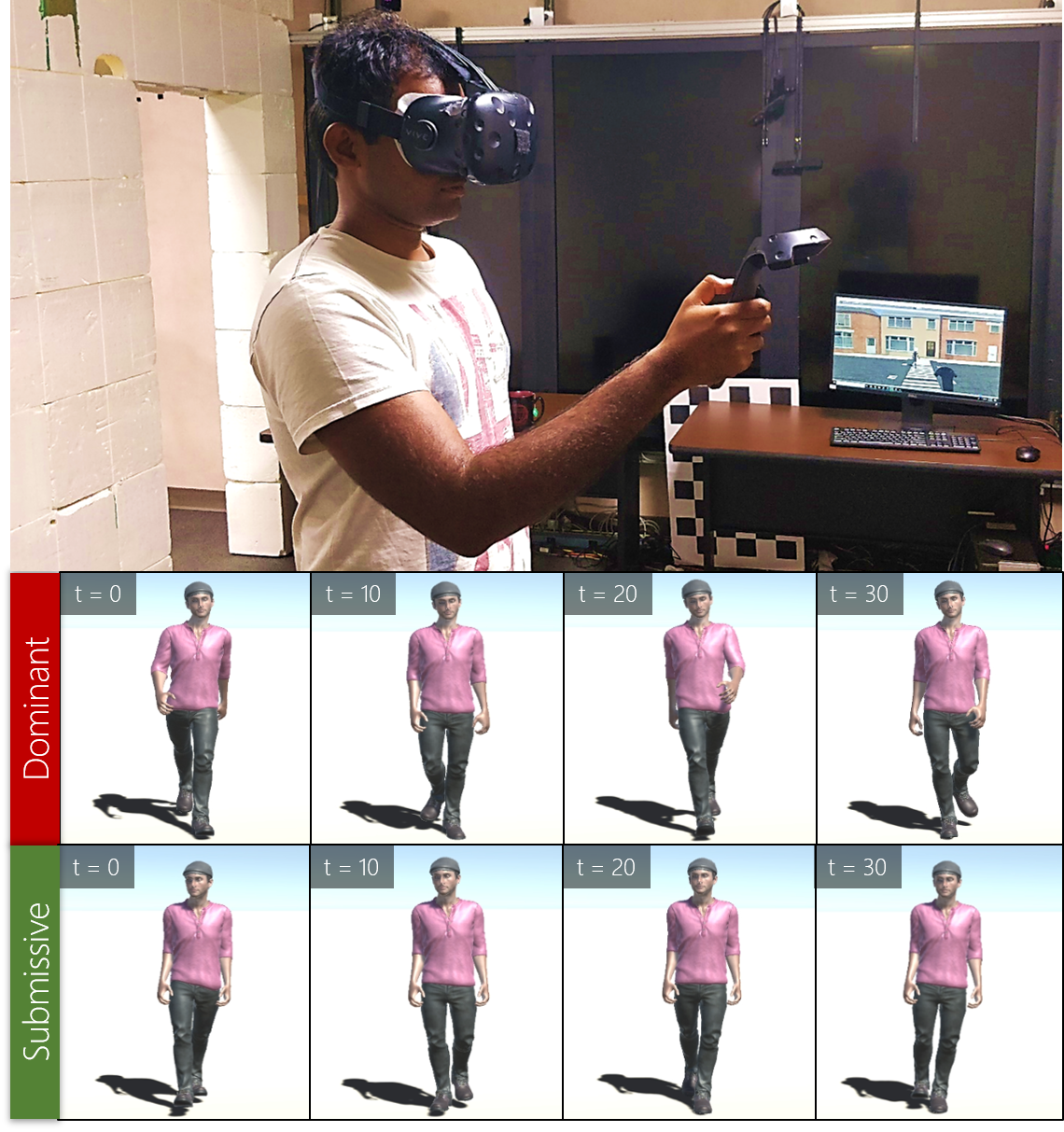

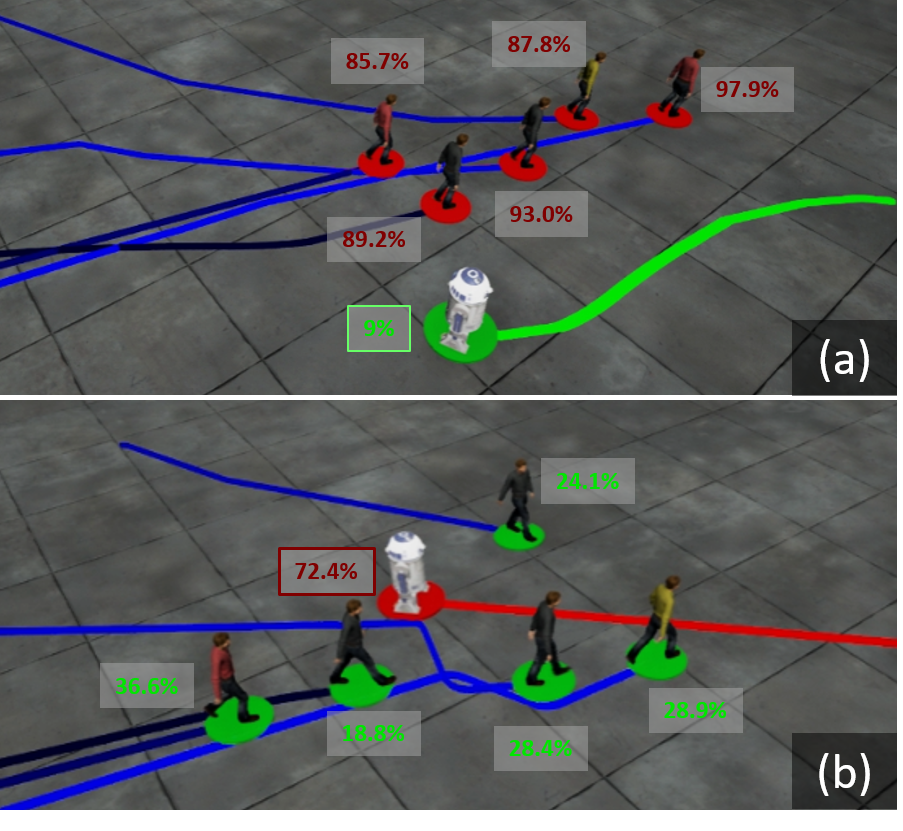

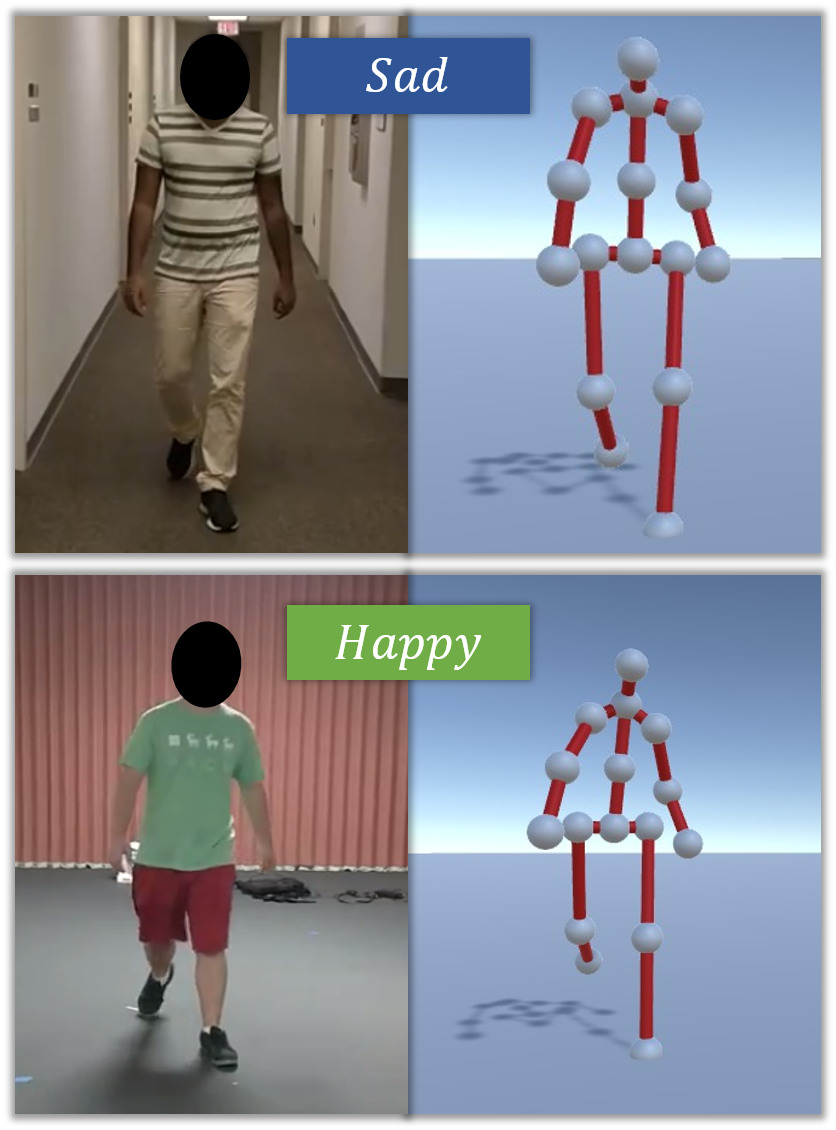

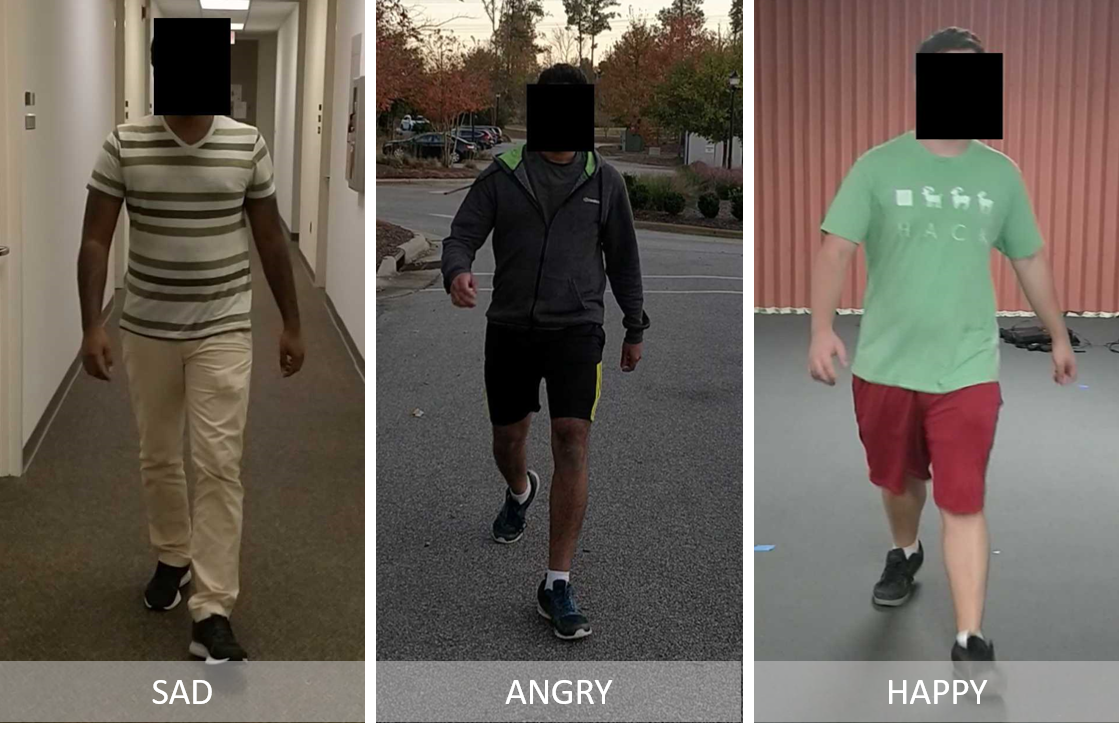

Detecting Deception with Gait and Gesture

We present a data-driven deep neural algorithm for detecting deceptive walking behavior using nonverbal cues such as gait and gesture. Using gait and gesture data from a novel DeceptiveWalk dataset, we train an LSTM-based deep neural network to obtain deep features. We combine psychology-based gait features, gesture features, and these deep features to detect deceptive walking with an accuracy of 88.41%. To the best of our knowledge, ours is the first algorithm to detect deceptive behavior using nonverbal cues of gait and gesture.
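As a rough illustration of the pipeline described above, the sketch below runs a pose sequence through a single LSTM layer, takes the final hidden state as the deep features, and concatenates it with hand-crafted gait and gesture features. It is a minimal sketch under stated assumptions, not the network from the paper: the function names, the dimensions, the random weights, and the example feature values are all hypothetical, and the classifier that would consume the combined vector is omitted.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h, c, W, b):
    """One LSTM time step. W maps [x; h] to four stacked gates (i, f, g, o)."""
    n = len(h)
    z = x + h  # list concatenation: current input followed by previous hidden state
    pre = [sum(w * v for w, v in zip(row, z)) + bias for row, bias in zip(W, b)]
    i = [sigmoid(p) for p in pre[0:n]]            # input gate
    f = [sigmoid(p) for p in pre[n:2 * n]]        # forget gate
    g = [math.tanh(p) for p in pre[2 * n:3 * n]]  # candidate cell state
    o = [sigmoid(p) for p in pre[3 * n:4 * n]]    # output gate
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new


def deep_features(sequence, W, b, hidden_size):
    """Final hidden state of the LSTM after consuming the whole sequence."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for frame in sequence:
        h, c = lstm_step(frame, h, c, W, b)
    return h


def combined_features(sequence, gait_feats, gesture_feats, W, b, hidden_size):
    """Concatenate deep features with hand-crafted gait and gesture features."""
    return deep_features(sequence, W, b, hidden_size) + list(gait_feats) + list(gesture_feats)


# Hypothetical sizes: 6 values per pose frame, 4 hidden units, 10 frames.
INPUT_SIZE, HIDDEN_SIZE, NUM_FRAMES = 6, 4, 10
rng = random.Random(0)

# Untrained random weights stand in for a trained network.
W = [[rng.uniform(-0.1, 0.1) for _ in range(INPUT_SIZE + HIDDEN_SIZE)]
     for _ in range(4 * HIDDEN_SIZE)]
b = [0.0] * (4 * HIDDEN_SIZE)

walk = [[rng.uniform(-1.0, 1.0) for _ in range(INPUT_SIZE)] for _ in range(NUM_FRAMES)]
gait = [0.8, 1.2, 0.3]   # placeholder hand-crafted gait features
gesture = [1.0, 0.0]     # placeholder hand-crafted gesture features

features = combined_features(walk, gait, gesture, W, b, HIDDEN_SIZE)
print(len(features))  # 4 deep + 3 gait + 2 gesture values
```

In a trained system the combined vector would be passed to a classifier that outputs a deceptive or natural label; that stage is left out here because its details are not given in this summary.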

Randhavane, T., Bhattacharya, U., Kapsaskis, K., Gray, K., Bera, A., & Manocha, D. (2020). The Liar's Walk: Detecting Deception with Gait and Gesture.

PDF, Video (MP4, 81.6 MB)