Learning-based Cloth Material Recovery from Video

ABSTRACT

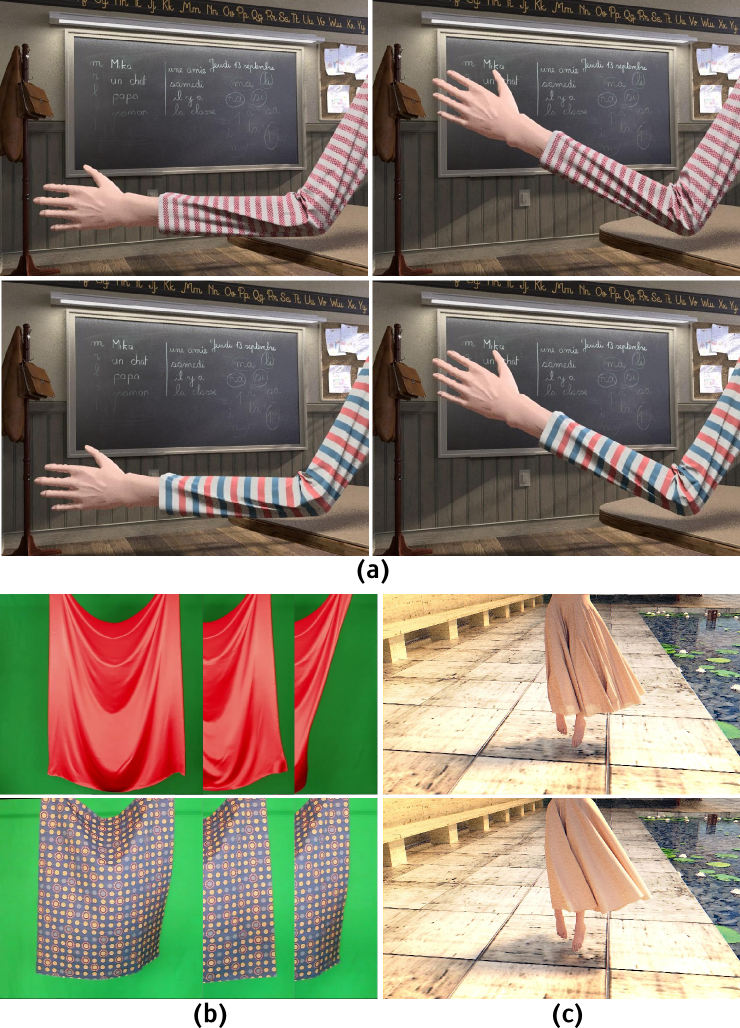

Image and video understanding enables better reconstruction of the physical world. Existing methods focus largely on the geometry and visual appearance of the reconstructed scene. In this paper, we extend the frontier of image understanding and present a method to recover the material properties of cloth from a video. Previous cloth material recovery methods often require markers or a complex experimental setup to acquire physical properties, or are limited to certain types of images or videos. Our approach takes advantage of the appearance changes of moving cloth to infer its physical properties. To extract information about the cloth, our method characterizes both the motion and the visual appearance of the cloth geometry. We apply a Convolutional Neural Network (CNN) and a Long Short-Term Memory (LSTM) network to recover cloth material from videos. We also exploit simulated data to support statistical learning of the mapping between the visual appearance and the material type of the cloth. The effectiveness of our method is demonstrated through validation on both simulated datasets and real-life recorded videos.
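The CNN + LSTM idea in the abstract (a CNN summarizes each frame, an LSTM aggregates those summaries over time, and a classifier predicts the material type) can be illustrated with a toy NumPy sketch. This is not the authors' network: the function names (`conv2d_valid`, `frame_features`, `lstm_step`, `classify_video`, `init_params`), the single-layer "CNN", the layer sizes, and the three material classes are all hypothetical, and the weights are random and untrained. The released training code is the reference for the actual model.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Single-channel 2-D cross-correlation with 'valid' padding (no border)."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.empty((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def frame_features(frame, kernels):
    """Toy CNN stage: conv -> ReLU -> global average pool, one value per kernel."""
    return np.array([np.maximum(conv2d_valid(frame, k), 0.0).mean() for k in kernels])

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h, c, W, U, b):
    """One LSTM step; gate pre-activations stacked as [input, forget, output, candidate]."""
    n = h.shape[0]
    z = W @ x + U @ h + b
    i = sigmoid(z[0:n])
    f = sigmoid(z[n:2 * n])
    o = sigmoid(z[2 * n:3 * n])
    g = np.tanh(z[3 * n:4 * n])
    c = f * c + i * g          # update cell state
    h = o * np.tanh(c)         # new hidden state
    return h, c

def classify_video(frames, params):
    """Run per-frame features through the LSTM; return softmax over material classes."""
    kernels, W, U, b, W_out, b_out = params
    hidden = U.shape[1]
    h, c = np.zeros(hidden), np.zeros(hidden)
    for frame in frames:                      # frames: (T, H, W) array of grayscale images
        h, c = lstm_step(frame_features(frame, kernels), h, c, W, U, b)
    logits = W_out @ h + b_out                # classify from the final hidden state
    exp = np.exp(logits - logits.max())       # numerically stable softmax
    return exp / exp.sum()

def init_params(n_kernels=4, kernel_size=3, hidden=8, n_classes=3, seed=0):
    """Random, untrained parameters (hypothetical sizes, for illustration only)."""
    rng = np.random.default_rng(seed)
    kernels = [rng.normal(size=(kernel_size, kernel_size)) for _ in range(n_kernels)]
    W = rng.normal(scale=0.1, size=(4 * hidden, n_kernels))
    U = rng.normal(scale=0.1, size=(4 * hidden, hidden))
    b = np.zeros(4 * hidden)
    W_out = rng.normal(scale=0.1, size=(n_classes, hidden))
    b_out = np.zeros(n_classes)
    return kernels, W, U, b, W_out, b_out

# Usage: a synthetic 10-frame, 16x16 grayscale "video".
video = np.random.default_rng(1).random((10, 16, 16))
probs = classify_video(video, init_params())
print(probs)
```

Using the final LSTM hidden state for classification is one common design choice for sequence classification; averaging the hidden states over all frames is an equally plausible alternative. In practice the hand-written convolution would be replaced by a pretrained CNN in a deep learning framework, with weights learned from simulated cloth videos.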

PUBLICATION

Learning-based Cloth Material Recovery from Video

International Conference on Computer Vision 2017

Shan Yang, Junbang Liang, and Ming C. Lin

DATA DOWNLOAD

Data generation code

Training code and trained parameters

| Pose | Wind | Arm |
|---|---|---|
| 0 | wind_pose0 (7 GB) | arm_pose0 (8 GB) |
| 1 | wind_pose1 (14 GB) | arm_pose1 (8 GB) |