SynCoPation: Interactive Synthesis-Coupled Sound Propagation

Atul Rungta

Carl Schissler

Ravish Mehra

Chris Malloy

Ming Lin

Dinesh Manocha

University of North Carolina at Chapel Hill


Abstract

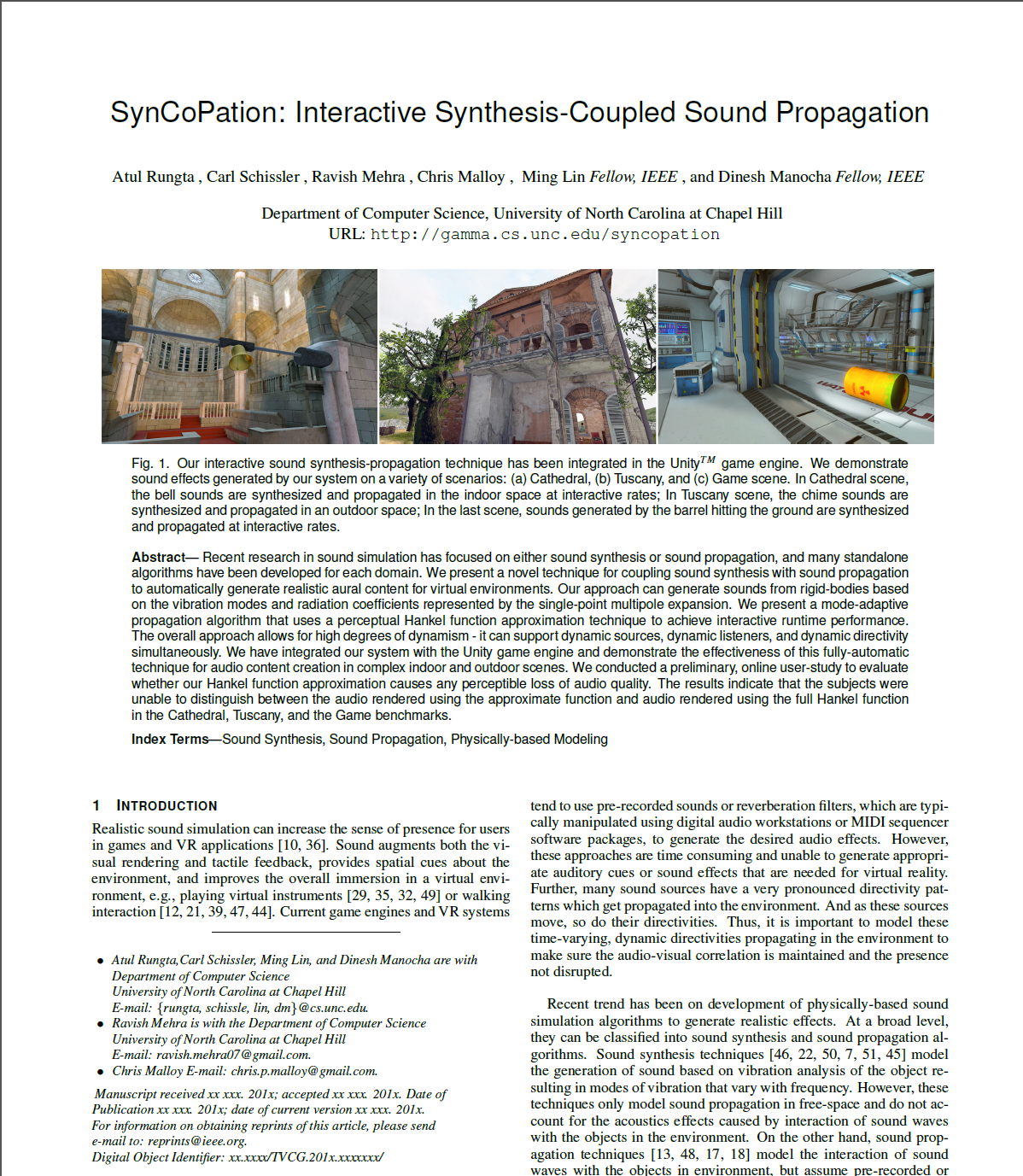

Recent research in sound simulation has focused on either sound synthesis or sound propagation, and many standalone algorithms have been developed for each domain. We present a novel technique for coupling sound synthesis with sound propagation to automatically generate realistic aural content for virtual environments. Our approach can generate sounds from rigid bodies based on the vibration modes and radiation coefficients represented by the single-point multipole expansion. We present a mode-adaptive propagation algorithm that uses a perceptual Hankel function approximation technique to achieve interactive runtime performance. The overall approach allows for a high degree of dynamism: it can support dynamic sources, dynamic listeners, and dynamic directivity simultaneously. We have integrated our system with the Unity game engine and demonstrate the effectiveness of this fully automatic technique for audio content creation in complex indoor and outdoor scenes. We conducted a preliminary online user study to evaluate whether our Hankel function approximation causes any perceptible loss of audio quality. The results indicate that the subjects were unable to distinguish between audio rendered using the approximate function and audio rendered using the full Hankel function in the Cathedral, Tuscany, and Game benchmarks.
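The abstract does not say how the perceptual Hankel function approximation is built, so the sketch below does not reproduce it. It only illustrates, under stated assumptions, why approximating Hankel functions is attractive at all: the exact spherical Hankel function of order n needs an n-term sum per evaluation, while its standard far-field asymptotic form is a single complex exponential. The choice of the first-kind function, the function names, and the sample distances are all assumptions made for illustration.

```python
import cmath
from math import factorial


def spherical_hankel1(n: int, x: float) -> complex:
    """Exact h_n^(1)(x) for integer n >= 0 and x > 0, via its finite closed-form sum."""
    prefactor = (-1j) ** (n + 1) * cmath.exp(1j * x) / x
    series = sum(
        (1j ** m) * factorial(n + m)
        / (factorial(m) * factorial(n - m) * (2 * x) ** m)
        for m in range(n + 1)
    )
    return prefactor * series


def far_field_hankel1(n: int, x: float) -> complex:
    """Textbook large-argument asymptotic form: keeps only the leading term of the sum."""
    return (-1j) ** (n + 1) * cmath.exp(1j * x) / x


def relative_error(n: int, x: float) -> float:
    """Relative error of the far-field form against the exact value."""
    exact = spherical_hankel1(n, x)
    return abs(far_field_hankel1(n, x) - exact) / abs(exact)


if __name__ == "__main__":
    # Hypothetical sample arguments x = k * r (wavenumber times distance).
    for order in (1, 2, 4):
        for x in (1.0, 10.0, 100.0):
            print(f"n={order} x={x:6.1f} relative error={relative_error(order, x):.3e}")
```

For a fixed order, the error of the asymptotic form shrinks as x grows, and it grows with the order at a fixed x. Whether a given error is audible is a perceptual question, which is what the user study described above was designed to assess for the authors' own approximation.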

Atul Rungta, Carl Schissler, Ravish Mehra, Chris Malloy, Ming Lin, and Dinesh Manocha. SynCoPation: Interactive Synthesis-Coupled Sound Propagation.

Preprint (PDF, 5.7 MB), (IEEE VR 2016, Proceedings of IEEE TVCG)