Symphony

Symphony: Realtime Physically Based Sound Synthesis for Large Scale Environments

A demonstration of the possibilities for realtime sound synthesis for large environments on today's computers.

Authors: Nikunj Raghuvanshi and Ming C. Lin

Email: {nikunj, lin} AT cs.unc.edu

Department of Computer Science, The University of North Carolina at Chapel Hill

ABSTRACT

We present an interactive approach for generating realistic physically-based sounds from rigid-body dynamic simulations. We use spring-mass systems to model each object's local deformation and vibration, which we demonstrate to be an adequate approximation for capturing physical effects such as the magnitude of impact forces, the location of impact, and rolling sounds. No assumption is made about mesh connectivity or topology. Surface meshes used for rigid-body dynamic simulation are used for sound simulation without any modifications. We use results from auditory perception and a novel priority-based quality scaling scheme to enable the system to meet variable, stringent time constraints in a real-time application, while ensuring minimal reduction in the perceived sound quality. With this approach, we have observed up to an order of magnitude speed-up compared to an implementation without the acceleration. As a result, we are able to simulate moderately complex scenes with up to hundreds of sounding objects at over 100 frames per second (FPS), making this technique well suited for interactive applications like games and virtual environments. Furthermore, we use OpenAL and EAX on Creative Sound Blaster Audigy 2 cards for fast hardware-accelerated propagation modeling of the synthesized sound.
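The abstract does not spell out how a spring-mass model turns into sound. Mode compression and mode truncation, named under Performance, imply that the model is decomposed into vibration modes, and a common way to do that is modal synthesis: diagonalize the system so each mode is an independent damped oscillator, then sum the resulting damped sinusoids. The Python sketch below assumes a toy 1D chain of equal masses fixed at both ends, Rayleigh damping C = aM + bK, and an impulsive hit. The function names `chain_stiffness`, `modal_decomposition` and `synthesize_impulse`, and every numeric value, are hypothetical illustrations, not Symphony's code or parameters.

```python
import numpy as np

def chain_stiffness(n, stiffness):
    """Stiffness matrix K for n equal point masses in a line, joined to each
    other and to two fixed walls by springs of the given stiffness."""
    return stiffness * (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1))

def modal_decomposition(K, m):
    """Mode frequencies (rad/s) and orthonormal mode shapes for M = m * I."""
    lam, V = np.linalg.eigh(K)            # K = V diag(lam) V^T
    omega = np.sqrt(np.clip(lam, 0.0, None) / m)
    return omega, V

def synthesize_impulse(K, m, impulse, a, b, sample_rate=44100, duration=0.5):
    """Response to an impulse (one momentum value per mass) under Rayleigh
    damping C = a*M + b*K, returned as a sum of damped sinusoids."""
    omega, V = modal_decomposition(K, m)
    t = np.arange(int(sample_rate * duration)) / sample_rate
    v0 = V.T @ impulse / m                # initial velocity of each mode
    d = a + b * omega**2                  # modal equation: q'' + d q' + w^2 q = 0
    out = np.zeros_like(t)
    for w, di, vi in zip(omega, d, v0):
        disc = w**2 - 0.25 * di**2
        if disc <= 0.0:                   # critically or over-damped: no tone, skip
            continue
        wd = np.sqrt(disc)                # damped angular frequency
        out += (vi / wd) * np.exp(-0.5 * di * t) * np.sin(wd * t)
    return t, out

# Usage: strike the third of eight masses.
n, m = 8, 0.01
K = chain_stiffness(n, 1.0e6)
impulse = np.zeros(n)
impulse[2] = 1.0e-3
t, s = synthesize_impulse(K, m, impulse, a=5.0, b=1.0e-7)
```

Summing the modal displacements directly is a simplification; a renderer would typically scale each mode by an output gain before mixing.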

Related Publication

"Interactive Sound Synthesis for Large Scale Environments," Nikunj Raghuvanshi and Ming C. Lin, In Proceedings of ACM I3D, California, March 2006

Screenshots

Realism: Numerous dice fall on a three-octave xylophone in close succession, playing out the song "The Entertainer" (see the video). Our algorithm produces the corresponding musical tones at more than 500 FPS for this complex scene, with audio generation taking 10% of the total CPU time, on a 3.4 GHz Pentium 4 laptop with 1 GB RAM.

Complex Environments: More than 100 metallic rings fall onto a wooden table. Each ring is treated as an aurally separate object, and both the rings and the table produce sound. The audio simulation runs at more than 200 FPS, while the application frame rate is 100 FPS. Quality scaling keeps the frame rate steady without degrading the perceived sound quality (see the next figure).
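Priority-based quality scaling can be pictured as dividing a fixed per-frame budget of modes among the sounding objects. The Python sketch below is one possible scheme, not the paper's exact rule. It assumes each object carries a hypothetical `priority` (for instance its current loudness) and a list of current mode gains; each object receives a share of the budget proportional to its priority, any share an object cannot use flows to lower-priority objects, and each object keeps its loudest modes. `SoundingObject` and `allocate_modes` are illustrative names.

```python
from dataclasses import dataclass, field

@dataclass
class SoundingObject:
    name: str
    priority: float                                  # hypothetical, e.g. current loudness
    mode_gains: list = field(default_factory=list)   # current amplitude of each mode

def allocate_modes(objects, budget):
    """Distribute a per-frame budget of modes among objects in proportion to
    priority. Returns {name: sorted indices of the modes to synthesize}."""
    remaining_budget = budget
    remaining_priority = sum(o.priority for o in objects)
    plan = {}
    for obj in sorted(objects, key=lambda o: o.priority, reverse=True):
        if remaining_priority > 0:
            share = round(remaining_budget * obj.priority / remaining_priority)
        else:
            share = 0
        # An object cannot use more modes than it has; leftovers pass down.
        share = max(0, min(share, len(obj.mode_gains), remaining_budget))
        loudest = sorted(range(len(obj.mode_gains)),
                         key=lambda i: obj.mode_gains[i], reverse=True)
        plan[obj.name] = sorted(loudest[:share])
        remaining_budget -= share
        remaining_priority -= obj.priority
    return plan

# Usage: two objects competing for 8 modes in one audio frame.
bell = SoundingObject("bell", 3.0, [0.1 * i for i in range(10)])
ring = SoundingObject("ring", 1.0, [1.0 / (i + 1) for i in range(10)])
plan = allocate_modes([bell, ring], budget=8)
# plan["bell"] -> [4, 5, 6, 7, 8, 9], plan["ring"] -> [0, 1]
```

Because each share is capped by the remaining budget, the total number of synthesized modes never exceeds the budget, which is what bounds the cost of each audio frame.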

Performance

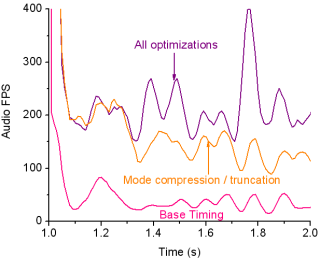

This graph shows the audio simulation FPS for the "rings" scene shown above from t = 1 s to t = 2 s, during which almost all the collisions take place. The bottom-most curve shows the FPS for an implementation using none of the acceleration techniques described in the paper. The topmost curve shows the FPS with mode compression, mode truncation and quality scaling. Note how the FPS stays near 200 even when the other two curves dip due to numerous collisions between 1.5 s and 2.0 s. The video frame rate is 100 FPS, and an "audio frame" denotes the sound corresponding to one video frame, i.e. 10 ms of audio.
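Two of the accelerations named above, and the meaning of an audio frame, can be made concrete with a little arithmetic. The Python sketch below assumes a 44.1 kHz sample rate (not stated on this page), a hypothetical relative frequency threshold for merging modes in mode compression, and an exponential amplitude envelope for mode truncation. The function names and thresholds are illustrative only and are not taken from the paper.

```python
import math

SAMPLE_RATE = 44100  # assumed sample rate; not given on this page
VIDEO_FPS = 100      # application (video) frame rate from the text

# One audio frame is the sound for one video frame: 1/100 s = 10 ms.
SAMPLES_PER_AUDIO_FRAME = SAMPLE_RATE // VIDEO_FPS  # 441 samples

def realtime_factor(audio_fps, video_fps=VIDEO_FPS):
    """How many times faster than real time the synthesis runs.
    At 200 audio FPS with 100 video FPS, 10 ms of sound takes 5 ms to compute."""
    return audio_fps / video_fps

def compress_modes(freqs_hz, gains, rel_threshold=0.01):
    """Mode compression (sketch): merge modes whose frequencies lie within
    rel_threshold (relative) of a group's lowest frequency, on the assumption
    that a listener cannot tell them apart. Gains of merged modes are summed,
    a simplification that ignores relative phase."""
    merged = []
    for f, g in sorted(zip(freqs_hz, gains)):
        if merged and f - merged[-1][0] <= rel_threshold * merged[-1][0]:
            merged[-1][1] += g
        else:
            merged.append([f, g])
    return [(f, g) for f, g in merged]

def truncation_time(gain, decay_rate, audible_threshold):
    """Mode truncation (sketch): for an envelope gain * exp(-decay_rate * t),
    return the time after which the mode stays below audible_threshold and
    can be dropped from the synthesis loop."""
    if gain <= audible_threshold:
        return 0.0
    return math.log(gain / audible_threshold) / decay_rate

# Usage
modes = compress_modes([440.0, 442.0, 880.0], [1.0, 0.5, 0.25])
# modes -> [(440.0, 1.5), (880.0, 0.25)]: 442 Hz is within 1% of 440 Hz
```

Both techniques trade exactness for speed only where the difference should be inaudible: compression removes modes a listener cannot separate, and truncation stops work on modes that have decayed below hearing.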

Benefits of Symphony

Acknowledgements